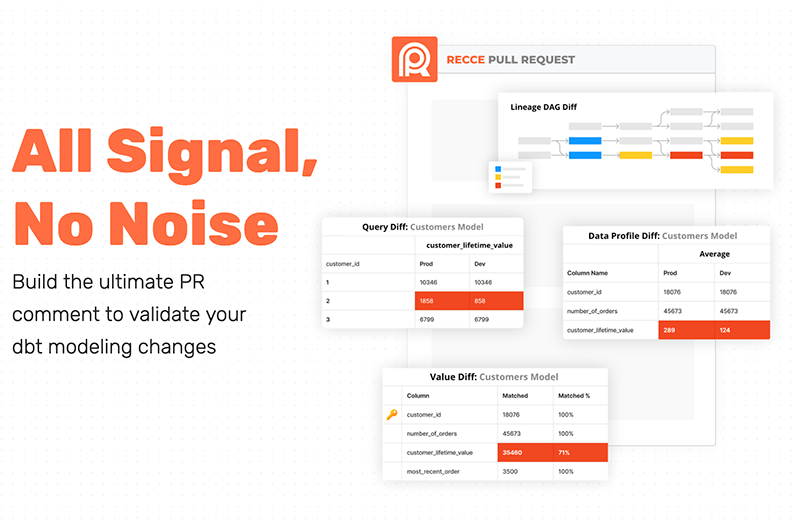

Hands-On Data Impact Analysis for dbt Data Projects with Recce

dbt data projects aren’t getting any smaller and, with the increasing complexity of DAGs, properly validating your data modeling changes has become a difficult task. The adoption of best practices such as data project pull request templates and other ‘pull request guard rails’ has increased merge times and prolonged the QA process for pull requests.

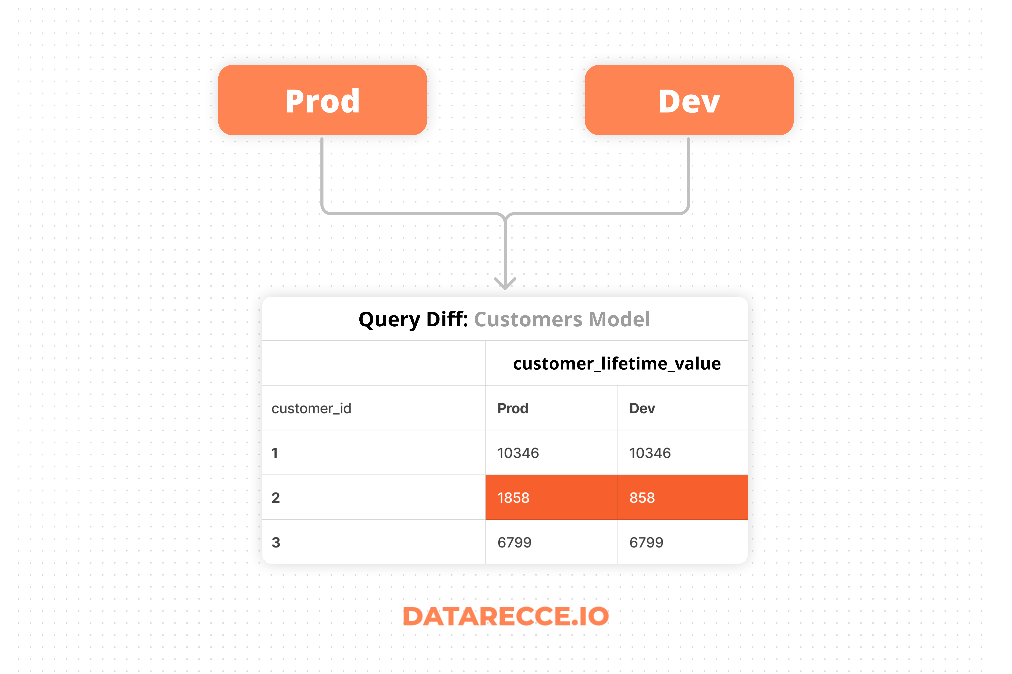

The difficulty comes from your responsibility to check not only the model SQL code, but also the data, which is a product of your code. Even when code looks right, silent errors and hard-to-notice bugs can make their way into the data. A proper pull request review is not complete without data validation.